“Evaluation is the process of determining the merit, worth, and value of things, and evaluations are the products of that process” (Reiser and Dempsey, 2012).

Models for Evaluation

In our text, evaluation encompasses “merit, worth and value,” three words whose meanings often vary with context and with the opinions of others. To take a deeper look at evaluating a program or process, we will adopt the book’s definitions of these terms: merit meaning “intrinsic value,” worth meaning “market value,” and value referring to judgments about what contributes to the progress and success of a corporation or business as a whole. The text goes on to describe several models for evaluating instructional design, including the Context, Input, Process, Product (CIPP) model, Rossi’s Five Domain Evaluation Model and Kirkpatrick’s Training Evaluation Model. In addition to the models explained in the text, I found two others useful for evaluating the overall success of an innovation or program: Kaufman’s Model of Learning Evaluation, which builds on Kirkpatrick’s earlier model, and Anderson’s Value of Learning Model.

Kaufman's Model of Learning Evaluation

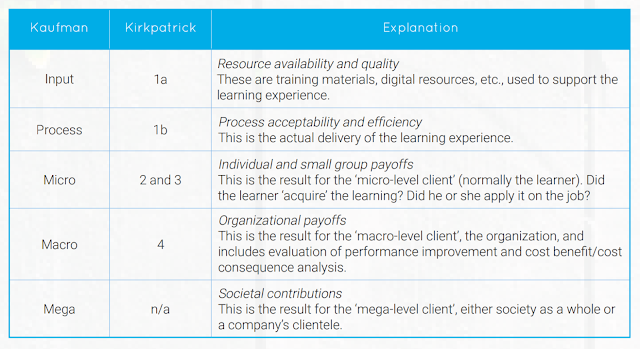

As mentioned above, Kaufman’s Model of Learning Evaluation builds on the ideas in Kirkpatrick’s model from the text. Kirkpatrick’s model revolves around four levels of evaluation: learners’ reactions to the experience (Level 1: Reaction), the actual learning and the changes that result from it (Level 2: Learning), changes in behavior on the job (Level 3: Behavior), and the effect of the learning and subsequent changes on the organization (Level 4: Results) (Reiser and Dempsey, 2012). Kaufman’s model retains the value of these four levels while introducing some improvements. First, it splits Level 1: Reaction into two elements: input and process. Kaufman further argues that evaluating materials separately from the process can help identify problem areas or a shortage of appropriate resources sooner, which in turn allows corrective action to be taken early. Because these two levels address delivery and impact separately, they depart from Kirkpatrick’s earlier model, which evaluates both elements together. Second, Kaufman adds a fifth level that looks at benefits to society and clients. While evaluating the effect on clients, customers or consumers of a product, innovation or program is beneficial, evaluating the effect on society can be challenging and cumbersome, and may yield insufficient data. Overall, both Kirkpatrick’s and Kaufman’s models were designed to evaluate more formal approaches to instructional delivery and training. To help you better visualize the formality of Kaufman’s model, I have provided a list of the steps below and how they correlate to Kirkpatrick’s original model.

Downes, Andrew (2017). Kaufman Kirkpatrick Explanation. Learning Evaluation Theory: Kaufman’s Five Levels of Evaluation. Franklin, TN: WatershedLRS.com.

Anderson's Value of Learning Model

Another alternative to the models presented in the text, Anderson’s Value of Learning Model, also emphasizes evaluating the impact on the organization, as both Kirkpatrick’s and Kaufman’s levels do. Anderson’s model consists of a three-stage evaluation cycle, outlined below, and is intended to be applied at the organizational level because it focuses on aligning the learning program’s goals with the goals of the organization. Finally, the Anderson model is intended to evaluate learning strategies rather than individual programs. Although the model is intentionally high-level and flexible for use at the organizational level, this design can also be a limitation: it does not lend itself to practical application or to specific, in-depth evaluations.

Anderson’s Value of Learning Model Phases:

- Determine current alignment against strategic priorities.

- Use a range of methods (learning function, return on expectation, return on investment, and benchmark/capacity measures) to assess and evaluate the contribution of learning.

- Establish the most relevant approaches for your organization.

Evaluating Instruction with Kaufman and Anderson

Although Kaufman’s and Anderson’s models are designed to be formal evaluation tools implemented at the organizational level, I found that they still correspond to current professional development practices. As a professional development presenter, I would apply these models to my own instruction through the following steps:

- Identify intended outcomes and evaluate how they align with district goals and initiatives.

- Determine which resources are appropriate for reaching the intended goal or outcome, locate these resources and coordinate access to them, and anticipate possible barriers, limitations and problems that might arise when using them.

- Use formative assessment to gauge the effectiveness and efficiency of the learning experience within the organization by developing an online platform where participants can share the products they create beyond the professional development experience.

- Identify organizational payoffs by surveying participants on the ease of use of the new ideas presented, how well they align with curriculum requirements and whether they were used in the classroom following the learning experience.

- Provide an opportunity for participants to predict the impact the new methods will have on society, based on the learning they will transfer to students.

More Evaluation Considerations

In evaluating the models mentioned above, I found that they do not necessarily account for unintended outcomes. This is where I feel that Return on Investment (ROI) might fail to capture success. Evaluations need to give learners opportunities to share consequences and outcomes they constructed as a result of the learning experience that the instructor (or, in the case above, the professional development presenter) may not have anticipated. In school districts, I often find that resources are allocated with ROI in mind, yet no accountability is put in place to ensure that such a return comes to fruition. A good example occurs when our district sends staff members to out-of-district conferences and training. The district either selects participants or leaves it to principals to select those who will get the most out of the content presented and, in return, bring new methods and strategies back to the other staff members on their campus. The evaluation process, however, fails to hold these staff members accountable for the greatest return: providing new learning experiences across the campus or district through professional development.

Human Performance

As I thought about the scenario above, and the lack of a formal accountability process, I couldn’t help but also think of the informal learning that results from these conferences for years to come. Informal learning, favored by constructivists like myself, recognizes the power of authentic learning that occurs on the learner’s own initiative and in the context of the job. Teachers and support staff work together regularly to enhance and enrich the learning process by constantly refining their instructional designs. While this kind of learning may be hard to measure, it is immediate, relevant and often more accessible than formal training. Informal learning places instructors in the role of coaches and experts, providing mentorship that makes learning more meaningful and personal. It also shifts the responsibility for learning onto the learners (teachers) and inspires them to seek out information to improve their instructional design. Technology makes this shift more feasible, continuous and collaborative. Furthermore, as new learning begins to take root, I feel it is important to develop knowledge management (KM) systems that make this learning available to all. Technology redefines KM by providing editable platforms for storing knowledge while allowing that knowledge to evolve over time. On another note, I found that performance support systems, while time-consuming, can be useful in some cases by providing a structure of support accessible to all. However, they are not as easy to update, and they can quickly become outdated and irrelevant. Overall, informal learning practices and knowledge management systems, when appropriate, align best with the constructivist learning theory that I find most teachers favor.

Downes, A. (2017). Learning Evaluation Theory: Kaufman’s Five Levels of Evaluation. Retrieved June 19, 2017, from https://www.watershedlrs.com/hubfs/CO/Kaufman_White_Paper/Learning_Evaluation_Kaufman_.pdf

Downes, A. (2017). Learning Evaluation Theory: Anderson's Value of Learning Model. Retrieved June 19, 2017, from https://www.watershedlrs.com/hubfs/CO/Anderson_White_Paper/Learning_Evaluation_Anderson.pdf

Reiser, R. A., & Dempsey, J. V. (2012). Trends and issues in instructional design and technology. Boston: Pearson Education.
